Types of Performance Testing

The target audience for this article is product managers, project managers, performance test engineers and anyone who cares about identifying performance issues in their application(s) before releasing them to the market. This article helps readers identify the correct type(s) of performance testing to conduct on their application.

Frequent application releases are no longer a fashion but a necessity for businesses to survive. Bringing quality products to market quickly also makes software testing challenging. In addition to performing functional testing in a short span of time, organizations also have to consider non-functional testing such as performance, security, compatibility and localization. Non-functional testing carries a high cost in terms of infrastructure, skill set and duration. For that reason, outsourcing it to other organizations makes business sense most of the time, if done correctly.

Performance testing is conducted to mitigate risks to the availability, reliability, scalability, responsiveness and stability of the system. Like any other testing project, a performance testing project involves many activities. A few of the important ones are:

- Identifying the important business scenarios

- Identifying the correct business scenario mix

- Identifying the correct workload

- Identifying the right tools / strategy for load generation

- Setting up the test environment

- Designing the scripts that emulate the business scenarios

- Preparing and populating the right amount of data

- Identifying the proper performance counters / metrics to collect

- Designing report template(s) for different stakeholders as per their needs

- Executing multiple performance runs as per the project requirements

In performance testing, many types of tests can be conducted on an application / system. The type of test or run depends on the performance requirements. In this article, I describe the importance of various performance test types from both a technical and a business perspective. Each type can be considered during multiple stages of a performance testing project. The definitions of run types might differ from other sources. The idea is not to define them but to logically divide or categorize the performance runs. That said, I have tried my best to stay as close as possible to the definitions found in other sources. The categories are defined in terms of the different performance run cycles in a performance testing project. For example, a project might perform a load run first and a Soak / Endurance run (with the same workload) later, if required.

The following is a summary of ten performance test types that can be selected for performance testing as per the business requirements. Each type is described in detail in its corresponding section below.

| S.No. | Performance Test Type | Description | Business Case | Performance Testing Life Cycle Stage |
|---|---|---|---|---|
| 1 | Single User | It is conducted to assess the performance of the application when only a single user is accessing the system. | It helps improve performance based on a set of pre-existing rules for high-performance web pages. | It is conducted during the script design phase of the performance testing life cycle or as a separate activity. For example, in the case of web applications, tools like YSlow, HttpAnalyzer etc. are used to analyze the performance of the application. |
| 2 | Contention | It is conducted to validate that the application works correctly when it is accessed concurrently. | Conducting this type of test at an early stage of the development life cycle identifies concurrency issues early. It also helps set up the environment for performance testing early rather than at release time. | It is conducted during the script design phase of the performance testing life cycle. |
| 3 | Light Load | It is conducted to validate the performance characteristics of the system when it is subjected to a workload / load volume much lower than what is anticipated in production. | Conducting this type of test at the last stage of the development life cycle identifies easy-to-find performance issues early rather than at release time. | It is conducted as a smoke test during the performance testing life cycle. |
| 4 | Load | It is conducted to validate the performance characteristics of the system when it is subjected to the workload / load volume anticipated in production. | Conducting this type of test before releasing the application to the market gives confidence and mitigates the risk of losing business due to performance issues. | It is usually conducted as the very first performance run during the performance testing life cycle. |
| 5 | Stress / Volume | It is conducted to make sure that the application can sustain more load than anticipated in production. | This type of test is usually conducted once the system is in production but needs to be tuned for future growth. | It is performed, if required, during the performance testing life cycle. |
| 6 | Resilience | It is conducted to make sure that the system is capable of coming back to its initial state (from a stressed state back to the normal load level) when it is stressed for a short duration. | This type of test is usually conducted once the stress / volume test has been done for performance tuning. | It is performed, if required, during the performance testing life cycle. |
| 7 | Failure | It is conducted to find out the capability of the application in terms of load. The load (concurrent users or volume) is increased until the application crashes. | This type of test is conducted to find out the number of days left for the business to resolve the performance issues in the application. | It is performed, if required, during the performance testing life cycle. It might require multiple run cycles before anything can be concluded. |
| 8 | Recovery | It is conducted to make sure that the application is able to heal itself when the load is decreased from the failure point to the stress point and then to the normal load. | This type of test is conducted to find out whether the application can recover quickly from an unexpected load on the system. | It is performed, if required, during the performance testing life cycle. |
| 9 | Spike | It is conducted to find out the stability of the system when load arrives in a burst over a very short time and is released quickly. | For example, viewing real-time replays of video streams (games) when there is a goal / wicket / six. | It is performed, if required, during the performance testing life cycle. |
| 10 | Soak / Endurance / Reliability / Availability / Stability | It is conducted to find out whether the system is capable of handling the expected load without any deterioration of response time / throughput when run for a longer time. | Conducting this type of test before releasing the application to the market gives confidence in the availability / stability of the system. | It is conducted as the last run during the performance testing life cycle. |

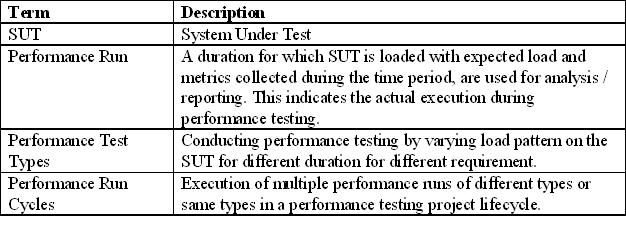

Terminology

Single User

A ‘Single User’ run is usually performed at the start of the performance run cycles or while the scripts are being designed. The application’s response times should be measured when it is accessed by only a single user. If the response times don’t meet the performance requirements, it is recommended to get them fixed before proceeding with subsequent performance runs.

Technical Perspective

- Script verification during design time for a single iteration.

- Script verification during design time for multiple iterations, for example, looking for correlation and caching issues.

- Identification of performance issues in the application when it is accessed by a single user only. For example, in the case of web applications, various performance issues can be identified using utilities like YSlow, Fiddler and HttpAnalyzer.

- The metrics collected during the ‘Single User’ run can be used as “Entry Criteria” for any performance testing project.

Business Perspective

Baselining the application’s end-to-end response time for a single user.
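Here is a minimal sketch of a single-user baseline. It assumes that each business scenario step can be wrapped in a Python callable. The step names, thresholds and iteration count are hypothetical and are not taken from any tool. A single virtual user times the steps one after another, and the results are compared with the response-time requirements:

```python
import statistics
import time
from typing import Callable, Dict, List, Tuple


def single_user_baseline(
    steps: Dict[str, Callable[[], None]],
    max_response_s: Dict[str, float],
    iterations: int = 3,
) -> Tuple[Dict[str, float], List[str]]:
    """Time each scenario step with one virtual user and flag slow steps.

    Returns the median response time per step and the names of steps whose
    median exceeds its (hypothetical) requirement in max_response_s.
    """
    medians: Dict[str, float] = {}
    too_slow: List[str] = []
    for name, step in steps.items():
        timings = []
        for _ in range(iterations):  # repeat to smooth out one-off noise
            start = time.perf_counter()
            step()
            timings.append(time.perf_counter() - start)
        medians[name] = statistics.median(timings)
        # Steps without a stated requirement are never flagged.
        if medians[name] > max_response_s.get(name, float("inf")):
            too_slow.append(name)
    return medians, too_slow


# Usage with stand-in steps; real steps would call the application under test.
baseline, failures = single_user_baseline(
    steps={"login": lambda: time.sleep(0.01), "search": lambda: time.sleep(0.05)},
    max_response_s={"login": 1.0, "search": 0.001},
)
```

Any step listed in `failures` would be fixed before moving on. The medians in `baseline` could serve as the entry criteria for later runs.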

Contention

The ‘Contention’ run is also performed during performance testing, but mainly at script design time rather than during the actual run cycles. The intention of this run is to find any synchronization-related issues in the scripts. It is listed here because it is also an important type of run to consider during the performance testing project lifecycle.

Technical Perspective

- Verifying script automation at design time for concurrency, for example, identifying issues related to sessions.

- Verifying script automation at design time for multiple iterations when the application is accessed concurrently, for example, identifying issues related to caching.

- Preparation of parameterized test data.
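The core of a contention check is to release several virtual users at exactly the same moment, so that session or caching clashes have a chance to surface. Below is a minimal sketch using Python's standard `threading.Barrier`. The `action` callable is a hypothetical stand-in for one scripted business step, and a real run would use the load tool's own synchronization (rendezvous) feature instead:

```python
import threading
from typing import Callable, List


def run_contention(action: Callable[[int], object], users: int) -> List[object]:
    """Release `users` virtual users at the same instant and collect results.

    Each thread waits on a shared barrier so that all calls to `action`
    start together. Exceptions are recorded in the result list instead of
    stopping the run.
    """
    barrier = threading.Barrier(users)
    results: List[object] = [None] * users

    def virtual_user(user_id: int) -> None:
        barrier.wait()  # block until every virtual user is ready
        try:
            results[user_id] = action(user_id)
        except Exception as exc:  # keep the failure for later analysis
            results[user_id] = exc

    threads = [threading.Thread(target=virtual_user, args=(i,)) for i in range(users)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


# Hypothetical stand-in for a scripted login that returns a session ID.
sessions = run_contention(lambda user_id: f"session-{user_id}", users=10)
duplicates = len(sessions) - len(set(sessions))  # non-zero would hint at session mix-ups
```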

Business Perspective

None; the contention test is performed mainly during the script design phase.

Light Load

A ‘Light Load’ test is simply a smoke run in a performance testing project. A performance run can last many hours. Discovering at the end that something was missed (for example, scheduling the collection of performance counters) would waste many man-hours of effort. It is therefore important to do a quick smoke run and fill the report with the required data before proceeding further. The system under test (SUT) on which the ‘Light Load’ test is conducted must contain all the software and hardware components that will be used in later runs.

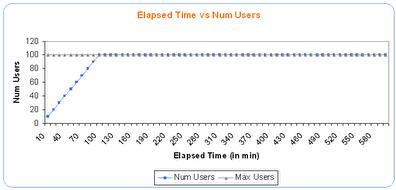

The number of concurrent users in the ‘Light Load’ test can be taken as the number of concurrent users during non-peak hours. If that number is negligible, 10% of the application’s normal load can be used instead, as shown in Fig – 1.

Sometimes the performance of the application needs to be gauged in terms of volume (e.g. file upload size, database size) rather than the number of concurrent users. In that case, the ‘Light Load’ test is run with 10% of the normal volume rather than with a very high volume. For example, suppose the normal load needs to be applied to a database initially populated with one million rows. The ‘Light Load’ test can then start with 100,000 rows, and the remaining 900,000 rows can be generated later.
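The sizing rule above reduces to a small calculation. The sketch below is a hedged illustration. The `negligible_at_or_below` cut-off is an assumption, since the article leaves "negligible" to the tester's judgment. The same function works for users or for volume units such as rows:

```python
from typing import Optional


def light_load(normal: int, off_peak: Optional[int] = None,
               percent: int = 10, negligible_at_or_below: int = 0) -> int:
    """Size a 'Light Load' run in users or in volume units (rows, MB, ...).

    Uses the off-peak figure when it is known and not negligible; otherwise
    falls back to `percent` of the normal load, rounded up with integer
    arithmetic so large volumes are not affected by float rounding.
    """
    if off_peak is not None and off_peak > negligible_at_or_below:
        return off_peak
    return max(1, (normal * percent + 99) // 100)


rows_first = light_load(1_000_000)       # rows to populate for the light run
rows_later = 1_000_000 - rows_first      # rows to generate before later runs
```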

Technical Perspective

- Smoke run for finding issues related to the test lab setup or any other missing details that might be required for the actual run.

- Freezing the performance requirements, metric collection and reporting structure.

- Finding out the resource utilization levels on all servers in the SUT during non-peak hours.

Business Perspective

Identification of throughput and end-to-end response time during non-peak hours.

Load

During performance testing, the load test is usually the run that is conducted from a business perspective. The metrics collected from this run can later be used for benchmarking / baselining.

During this run type, the workload, load volume and load patterns on the application should be simulated as closely as possible to the anticipated production load during normal / peak hours. It is very important to identify the correct workload distributions, with volume information, for this run type. The workload distributions that need to be considered are:

- Scenario frequencies

- Bandwidth (LAN, WAN, Dialup, Broadband etc.)

- File upload sizes / file types / database sizes and other activities running on the databases

- Maximum number of concurrent users in action

The other points to consider are the duration of the run and the ramp-up / ramp-down strategy. A very fast ramp-up / ramp-down (for example, 250 users / min) might destabilize the system, and the stakeholders might not accept those results. At the same time, if the ramp-up / ramp-down is expected to be fast, as in spike testing, it needs to be simulated accordingly.

Conducting a load run for a short time (say, a few minutes) might not provide correct results. It is recommended to run for at least half an hour at the maximum number of concurrent users.

The following are a few common examples of load patterns:

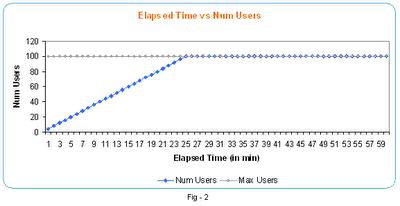

- Ramp up and stay at max users for a few hours (Fig – 2)

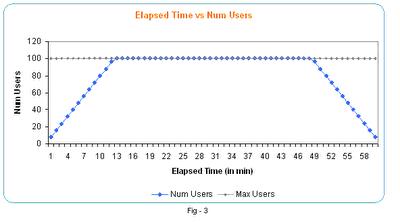

- Single hump: ramp up, stay at max users for a few hours and then ramp down (Fig – 3)

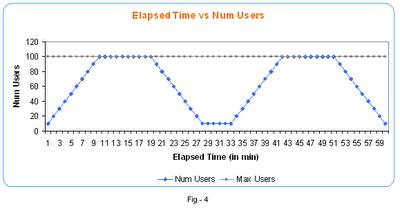

- Multiple humps during ramp up / ramp down (slowly) (Fig – 4)

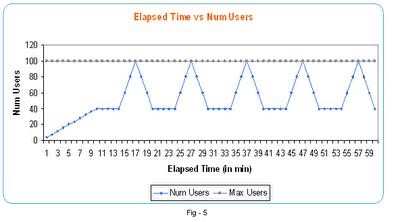

- Spikes: multiple ramp-ups / ramp-downs within a short interval of time (Fig – 5)

A performance run with a single hump is sufficient most of the time. Ramping down is required to make sure that the system releases resources when the load decreases.
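Here is a minimal sketch of the single-hump pattern, assuming linear ramps. The 500 users, 10-minute ramps and 30-minute hold are illustrative values only; the hold matches the half-hour minimum suggested above. Load tools normally configure this declaratively, so the sketch only shows the shape of the profile and how to check the ramp rate:

```python
from typing import List


def single_hump_users(t_s: float, max_users: int, ramp_up_s: float,
                      hold_s: float, ramp_down_s: float) -> int:
    """Target concurrent users at time t_s for a single-hump load pattern:
    linear ramp-up, a hold at max_users, then a linear ramp-down."""
    end_s = ramp_up_s + hold_s + ramp_down_s
    if t_s < 0 or t_s >= end_s:
        return 0
    if t_s < ramp_up_s:
        return round(max_users * t_s / ramp_up_s)
    if t_s < ramp_up_s + hold_s:
        return max_users
    return round(max_users * (end_s - t_s) / ramp_down_s)


def ramp_rate_per_min(max_users: int, ramp_up_s: float) -> float:
    """Users added per minute during the ramp-up."""
    return max_users * 60 / ramp_up_s


# Sample the profile every 5 minutes over a 50-minute run.
profile: List[int] = [single_hump_users(t, 500, 600, 1800, 600) for t in range(0, 3001, 300)]
```

Here the ramp rate is 50 users / min, well below the 250 users / min that the article cites as very fast.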

Application stability, reliability, robustness etc. can be assessed with the same workload and volume as in the Load run, but the run needs to last for an extended period of time. This type of run has intentionally been kept in a separate category (Soak / Endurance testing).

Technical Perspective

- Determining the application’s end-to-end response time, throughput, requests handled per minute, error percentage and resource utilization at the expected workload during normal / peak hours.

- Performing application tuning on a regular basis, using load testing data as input.

- Identifying bottlenecks in the system in terms of resource utilization, throughput or response time.

- Establishing a baseline for future testing

- Determining compliance with performance goals and requirements.

- Comparing different system configurations to determine which works best for both the application and the business.

- Determining the application’s desired performance characteristics before and after changes to the software.

- Evaluating the adequacy of a load balancer.

- Detecting concurrency issues.

- Detecting functional errors under load.

- Assessing the adequacy of network components under the desired load.
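Several of the metrics listed above (throughput, error percentage, response time) can be derived from the per-request samples that the load tool exports. Below is a rough sketch, assuming the samples have already been parsed into the hypothetical `Sample` records. It uses the nearest-rank percentile definition, and tools differ in how they compute percentiles:

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Sample:
    elapsed_s: float  # end-to-end response time of one request
    ok: bool          # False if the request returned an error


def summarize(samples: List[Sample], duration_s: float) -> Dict[str, float]:
    """Throughput, error percentage and nearest-rank percentiles for one run.

    Failed requests are included in the percentiles here; excluding them
    is an equally valid choice if the report states it.
    """
    times = sorted(s.elapsed_s for s in samples)
    n = len(times)

    def percentile(p: int) -> float:
        # Nearest rank: smallest value with at least p% of samples at or below it.
        rank = max(1, math.ceil(p * n / 100))
        return times[rank - 1]

    errors = sum(1 for s in samples if not s.ok)
    return {
        "requests_per_min": n * 60 / duration_s,
        "error_pct": 100 * errors / n,
        "p50_s": percentile(50),
        "p90_s": percentile(90),
    }


# Ten illustrative requests in one minute, one of which failed.
samples = [Sample(i / 10, ok=(i != 7)) for i in range(1, 11)]
report = summarize(samples, duration_s=60)
```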

Business Perspective

- Assessing release readiness by making informed decisions based on the performance data collected.

- Improving the corporate reputation by improving end-to-end response time.

- Evaluating the adequacy of current capacity.

- Validating the performance SLA before rolling the application out to production.

- Verifying that the application exhibits the desired performance characteristics within budgeted resource utilization constraints.

Stress / Volume

A stress run is conducted to make sure that the application can sustain more load than is currently anticipated in production. The load can be considered in terms of concurrent users, volume sizes or both. However, it is always better to run separate stress tests for concurrent users and for volume. Stress testing in terms of volume is sometimes also referred to as ‘Volume’ testing.

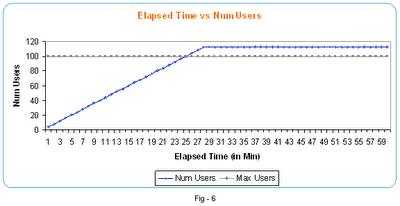

If the numbers for stress testing (number of concurrent users, volume size) are not known initially, it is recommended to stress the system with 10 percent more load, as shown in Fig – 6.

Stress testing is mainly done to find out the application’s capability of handling more load on a certain day / event, for example, handling extra web traffic during Christmas week.

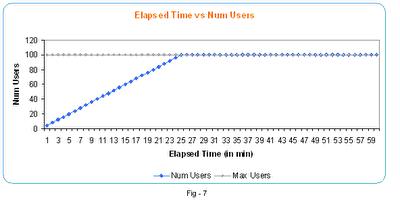

When stress testing is conducted in terms of volume, the number of concurrent users is kept at the normal load (as shown in Fig – 7). Only the volume, such as file sizes and database sizes, is increased.
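The article recommends stressing users and volume separately. A tiny helper that keeps one dimension at the normal level and raises the other by the default 10 percent can make run plans explicit. This is a sketch only; the function name and `mode` values are hypothetical:

```python
from typing import Tuple


def stress_targets(normal_users: int, normal_volume: int,
                   mode: str, extra_pct: int = 10) -> Tuple[int, int]:
    """Return (users, volume) for a stress run.

    mode="users": raise concurrent users, keep volume at normal.
    mode="volume": keep users at normal, raise volume (file or database size).
    Raised values are rounded up with integer arithmetic.
    """
    def raised(x: int) -> int:
        return (x * (100 + extra_pct) + 99) // 100

    if mode == "users":
        return raised(normal_users), normal_volume
    if mode == "volume":
        return normal_users, raised(normal_volume)
    raise ValueError("mode must be 'users' or 'volume'")
```

For example, with 500 normal users and a one-million-row database, the user stress run would use 550 users. The volume run would keep 500 users and grow the database to 1,100,000 rows.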

Technical Perspective

- Determining if response time can be degraded by over-stressing the system.

- Determining if data can be corrupted by over-stressing the system.

- Ensuring application functionality is intact when overstressed.

- Ensuring security vulnerabilities are not opened up by stressful conditions.

Business Perspective

Determining the availability and reliability of the application at more than the expected load.

Resilience

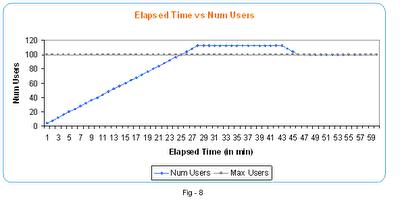

A resilience run is conducted to make sure that the system is capable of coming back to its initial state (from a stressed state back to the normal load level) when it is stressed for a short duration (Fig – 8). For example, an online store might run a discount on particular products for a short time, say one hour during the day.

Technical Perspective

Finding out whether resource utilization, throughput and response time return to their previous levels after the application has been stressed for some time and the load comes back down to the normal level.
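One way to make "returning to the previous state" measurable is to compare two sets of metrics: those from the normal-load period after the stress window, and those from before it. The sketch below assumes the averages have already been computed per period. The 10 percent tolerance and the metric names are illustrative assumptions rather than standard values:

```python
from typing import Dict


def back_to_baseline(baseline: Dict[str, float], post: Dict[str, float],
                     tolerance_pct: float = 10.0) -> Dict[str, bool]:
    """For each metric, report whether the post-stress value is within
    tolerance_pct percent of the pre-stress baseline value."""
    return {
        name: abs(post[name] - base) <= abs(base) * tolerance_pct / 100
        for name, base in baseline.items()
    }


before = {"p90_s": 2.0, "cpu_pct": 50.0, "requests_per_min": 1200.0}
post = {"p90_s": 2.1, "cpu_pct": 70.0, "requests_per_min": 1180.0}
check = back_to_baseline(before, post)
```

In this illustrative data, response time and throughput have recovered, but CPU utilization has not. That would be worth investigating before calling the system resilient.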

Business Perspective

To identify the resilience of the application.

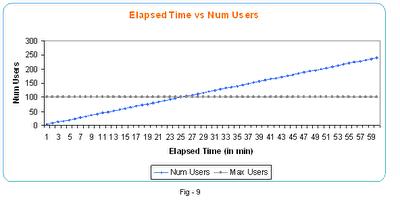

Failure

A failure run is conducted to find out the capability of the application in terms of load. The load (concurrent users or volume) is increased until the application crashes, as shown in Fig – 9. It is always recommended to increase the load in steps of 10 percent. Multiple runs during this testing can be used for capacity planning. A failure run can also show for how many more days the current hardware configuration can support the application.
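The stepping procedure above can be sketched as a loop that raises the load by 10 percent until a step fails. In this sketch, `run_step` is a hypothetical hook. It stands in for executing one load step with the test tool and deciding whether the application crashed or the errors became unacceptable. The simulated system in the usage line is likewise made up:

```python
from typing import Callable, List, Tuple


def find_failure_point(start_users: int, run_step: Callable[[int], bool],
                       step_pct: int = 10, max_steps: int = 100) -> Tuple[int, int, List[int]]:
    """Raise the load by step_pct percent per step until run_step reports failure.

    run_step(users) returns False when the step fails.
    Returns (last healthy load, first failing load, all loads tried).
    """
    users, last_ok = start_users, 0
    tried: List[int] = []
    for _ in range(max_steps):
        tried.append(users)
        if not run_step(users):
            return last_ok, users, tried
        last_ok = users
        # Integer 10% step; always add at least one user so small loads still grow.
        users = max(users + 1, users * (100 + step_pct) // 100)
    raise RuntimeError("no failure within max_steps; raise max_steps or step_pct")


# Simulated system that fails above 700 concurrent users.
last_ok, failed_at, tried = find_failure_point(500, lambda users: users <= 700)
```

The gap between `last_ok` and `failed_at` bounds the failure point. Repeating the search with smaller steps inside that gap is one way to approach the "multiple runs" the text mentions.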

Technical Perspective

- Identification of concurrent users / volume sizes that can bring the system down.

- Collection of metrics for capacity planning purposes.

- Helps to determine how much load the hardware can handle before resource utilization limits are exceeded.

- Provides an estimate of how far beyond the target load an application can go before causing failures and errors in addition to slowness.

- Helps to determine which kinds of failures are most important to plan for.

- Provides the response time trend.

- Determining if data can be corrupted by over-stressing the system.

- Allows you to establish application-monitoring triggers to warn of impending failures.

Business Perspective

- To identify the number of days the business has for upgrading the infrastructure / tuning the application.

- Determining the capacity of the application’s infrastructure.

- Determining the future resources required to maintain acceptable application performance.

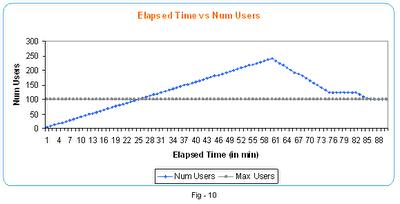

Recovery

A recovery run is done to make sure that the application is able to heal itself when the load is decreased from the failure point to the stress point and then to the normal load, as shown in Fig – 10.

This run is similar to the Resilience run. The difference is that the system is first destabilized and then checked to see whether it can recover quickly.

Technical Perspective

To find out whether the application is capable of self-healing in terms of throughput, response time and resource utilization when the load is decreased from the failure point to the expected normal load.
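The recovery criterion can be expressed as the time from the load drop until a metric settles and stays within its limit. The sketch below assumes a response-time series has been exported from the monitoring tool. The 2-second limit and the sample series are hypothetical, and the same idea applies to throughput or resource utilization:

```python
from typing import List, Optional, Tuple


def time_to_recover(samples: List[Tuple[float, float]], load_drop_s: float,
                    max_response_s: float) -> Optional[float]:
    """Seconds from the load drop until response time stays within the limit.

    samples are (timestamp_s, response_time_s) pairs in time order. Recovery
    counts only if every later sample also stays within the limit; returns
    None if the application never settles before the run ends.
    """
    recovered_at: Optional[float] = None
    for t, value in samples:
        if t < load_drop_s:
            continue  # still at the failure / stress point
        if value <= max_response_s:
            if recovered_at is None:
                recovered_at = t
        else:
            recovered_at = None  # relapsed; restart the recovery clock
    return None if recovered_at is None else recovered_at - load_drop_s


run = [(0, 9.0), (60, 8.0), (120, 4.0), (180, 1.5), (240, 3.0), (300, 1.2), (360, 1.1)]
recovery_s = time_to_recover(run, load_drop_s=60, max_response_s=2.0)
```

In this series the brief dip at 180 s does not count, because the response time relapses at 240 s. Recovery is measured from 300 s onwards.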

Business Perspective

To find out whether the application can be made available again quickly after a failure caused by an unexpected surge of traffic.

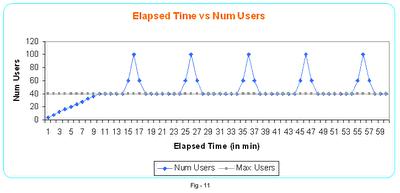

Spike

A spike run is performed in cases where the business expects load to arrive in a burst over a very short time and then drop suddenly (see Fig – 11). For example, viewing real-time replays of video streams (games) when there is a goal / wicket / six.

A spike run is different from a load run with a ‘spikes’ load pattern. In this run, the spikes are not part of the application’s normal load. Rather, they are extra load on the system during some specific event.
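In contrast to the ramped patterns used in load runs, a spike schedule can be modelled as a normal base load plus short, abrupt bursts of extra users tied to specific events. In the sketch below, the event times, burst size and 30-second burst length are hypothetical. In practice they would come from production traffic observed around such events:

```python
from typing import List


def spike_load(t_s: float, base_users: int, extra_users: int,
               spike_starts_s: List[float], spike_len_s: float) -> int:
    """Target users at time t_s: the normal load plus a burst of extra users
    during each spike window [start, start + spike_len_s)."""
    in_spike = any(start <= t_s < start + spike_len_s for start in spike_starts_s)
    # Step change on purpose: a spike arrives and leaves abruptly instead of ramping.
    return base_users + extra_users if in_spike else base_users


# Two hypothetical events (e.g. goals) at 90 s and 600 s, each drawing a 30 s burst.
events = [90, 600]
```

For example, `spike_load(100, 200, 1800, events, 30)` gives 2000 users during the first burst, and `spike_load(130, 200, 1800, events, 30)` gives 200 once the burst has passed.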

Technical Perspective

- Determining the application’s capability of handling resources properly when stressed in spurts rather than linearly and slowly.

- Detecting memory leaks, if there are any.

- Detecting thrashing (disk I/O) caused by the spikes, if there is any.

Business Perspective

Ensuring the application remains available even when there is a sudden surge of load in a very short span of time.

Soak / Endurance / Reliability / Availability / Stability

A soak run is usually performed at the last stage of the performance run cycles. Its purpose is to find out whether the application can handle the expected load without any deterioration of response time / throughput when run for a longer time. The longer run duration can be combined with any of the performance run types discussed above. The duration and run type are decided as per the requirements of the project.

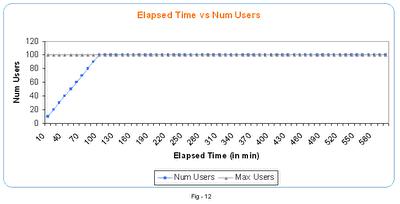

In Fig – 12, the soak run lasts 10 hours and the load pattern follows the ‘Load’ run type.

Technical Perspective

- Finding out memory leaks in the application, if there are any.

- Finding out the robustness of the system, in terms of hardware as well as third-party software, when stressed for a long duration.

- Making sure the resource utilization level is consistent and within the expected level while the application runs for a long duration.

- Verifying that sessions are handled properly in web-based or client-server applications when multiple concurrent users access the application for a long duration.

- Finding out if file / disk storage capacity is sufficient.

- Making sure that applications that run during non-peak hours (e.g. anti-virus) do not degrade the performance of the system.
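Memory leaks in a soak run usually show up as a steady upward trend rather than a single high reading. One hedged approach is to fit a least-squares line to memory samples taken through the run and flag growth above a threshold. The 5 MB/hour threshold and the sample data are assumptions for illustration. A real analysis would usually skip the warm-up period first:

```python
from typing import List, Tuple


def growth_per_hour(samples: List[Tuple[float, float]]) -> float:
    """Least-squares slope of (elapsed_hours, memory_mb) samples, in MB per hour."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = sum(t for t, _ in samples) / n
    mean_m = sum(m for _, m in samples) / n
    num = sum((t - mean_t) * (m - mean_m) for t, m in samples)
    den = sum((t - mean_t) ** 2 for t, _ in samples)
    if den == 0:
        raise ValueError("samples must span more than one point in time")
    return num / den


def looks_like_leak(samples: List[Tuple[float, float]],
                    max_growth_mb_per_hour: float = 5.0) -> bool:
    """Flag a sustained upward memory trend above the (assumed) threshold."""
    return growth_per_hour(samples) > max_growth_mb_per_hour


# Hourly samples over a 10-hour soak run, matching the duration in Fig – 12.
leaking = [(h, 500 + 20 * h) for h in range(11)]
steady = [(h, 512.0) for h in range(11)]
```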

Business Perspective

- Finding out if the application is available 24x7.

- Checking the consistency of end-to-end response time and throughput when the application runs for a long duration.

- Determining whether the stability of the application is acceptable.

Conclusion

One performance run is usually completed in 3-4 days, including analysis and reporting. This excludes workload analysis, script design, test environment setup etc. A project usually requires multiple runs (as discussed above) to validate the application’s performance requirements / goals. The types of run chosen depend on those requirements.

References

- Performance Testing Guidance for Web Applications by J.D. Meier, Carlos Farre, Prashant Bansode, Scott Barber and Dennis Rea

- http://www.perftestplus.com/resources.htm

- The Art of Application Performance Testing by Ian Molyneaux